Not Only Annoying but Dangerous

How to co-design social media for photosensitive users?

✍️ Published at ACM Conference ASSETS'24

Roles

UX research, UI design, and co-authoring and co-presenting a technical paper

Timeline

Sep 2023-Apr 2024

Tools

Paper Prototyping, Qualtrics & Figma

Team

Laila Dodhy, Rua Mae Williams, Chorong Park, and other CoLiberation Lab members

TLDR

Goal

The main goal is to create a more inclusive and safer online experience for photosensitive users. The project aims to combat the social isolation many experience by proposing user-centered design solutions that address the inherent dangers of social media platforms.

Problem

Millions of people with photosensitivity—a condition where flashing lights and patterns can trigger adverse reactions like seizures—face significant dangers on social media. Autoplaying videos, GIFs, and other user-generated content frequently contain flashing elements.

Current protections, such as platform warnings or system-level settings, are often insufficient, inconsistent, or nonexistent, leaving the burden of safety on the user. Additionally, malicious targeting and ineffective reporting mechanisms further exacerbate the problem.

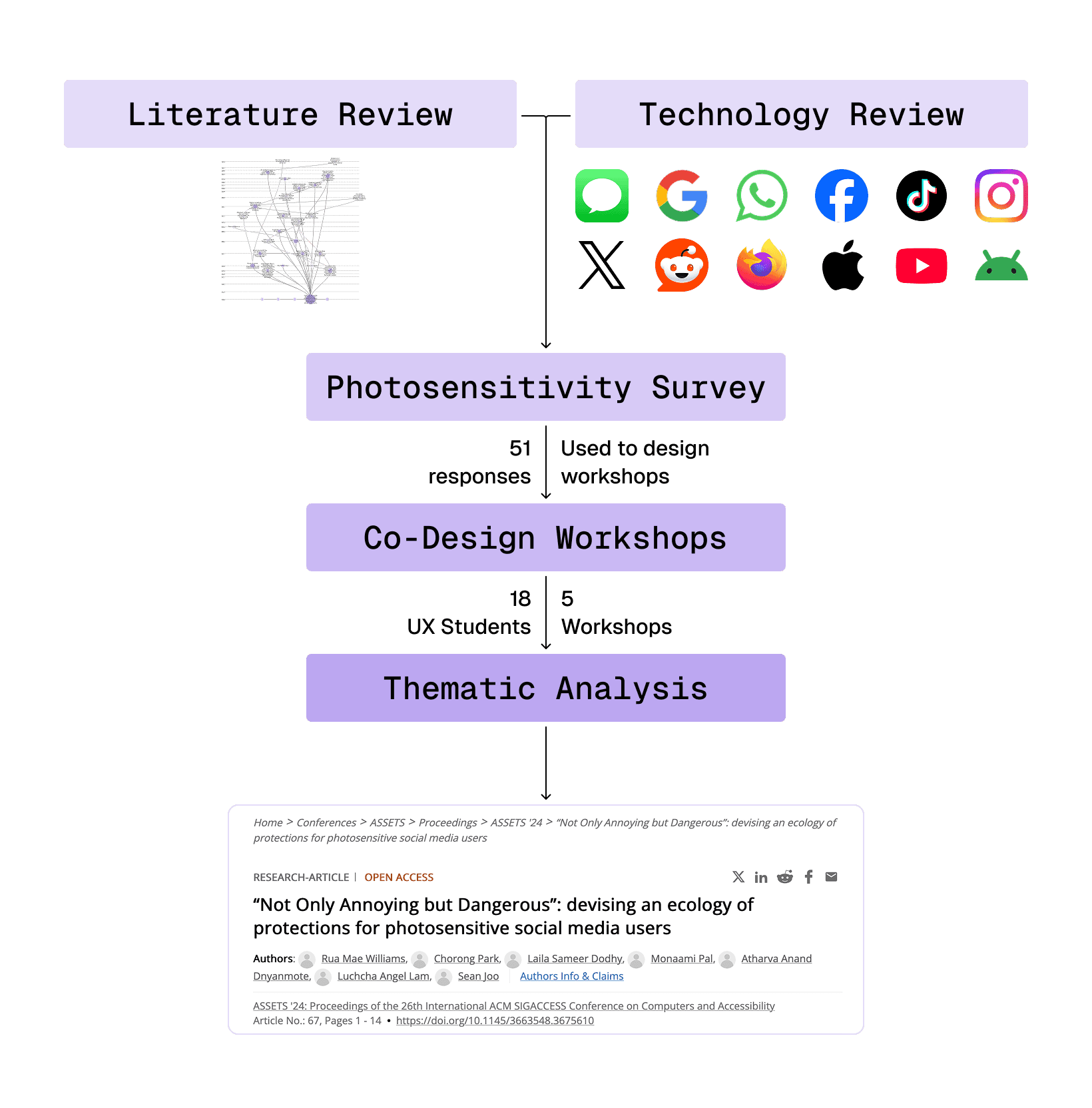

Methodology

Solutions

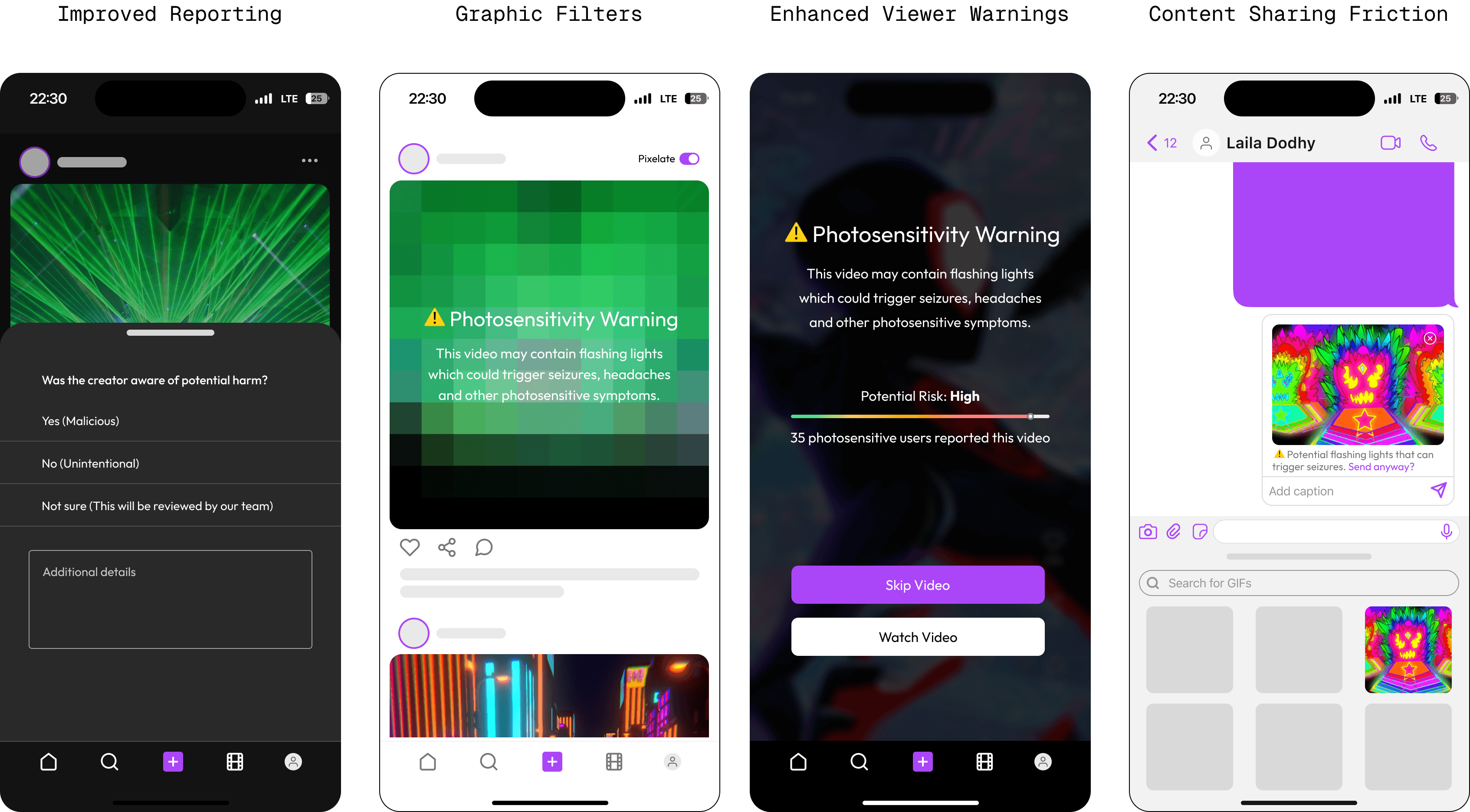

The project proposes a multi-layered suite of solutions to be implemented at various levels:

Improved Reporting: A new reporting mechanism with specific categories for photosensitivity triggers (e.g., strobes, flashing lights) that allows users to indicate if the content was malicious.

Graphic Filter: A real-time, system-level graphic filter that operates across all apps to detect dangerous pixel changes and smooth out flashes before they reach the user.

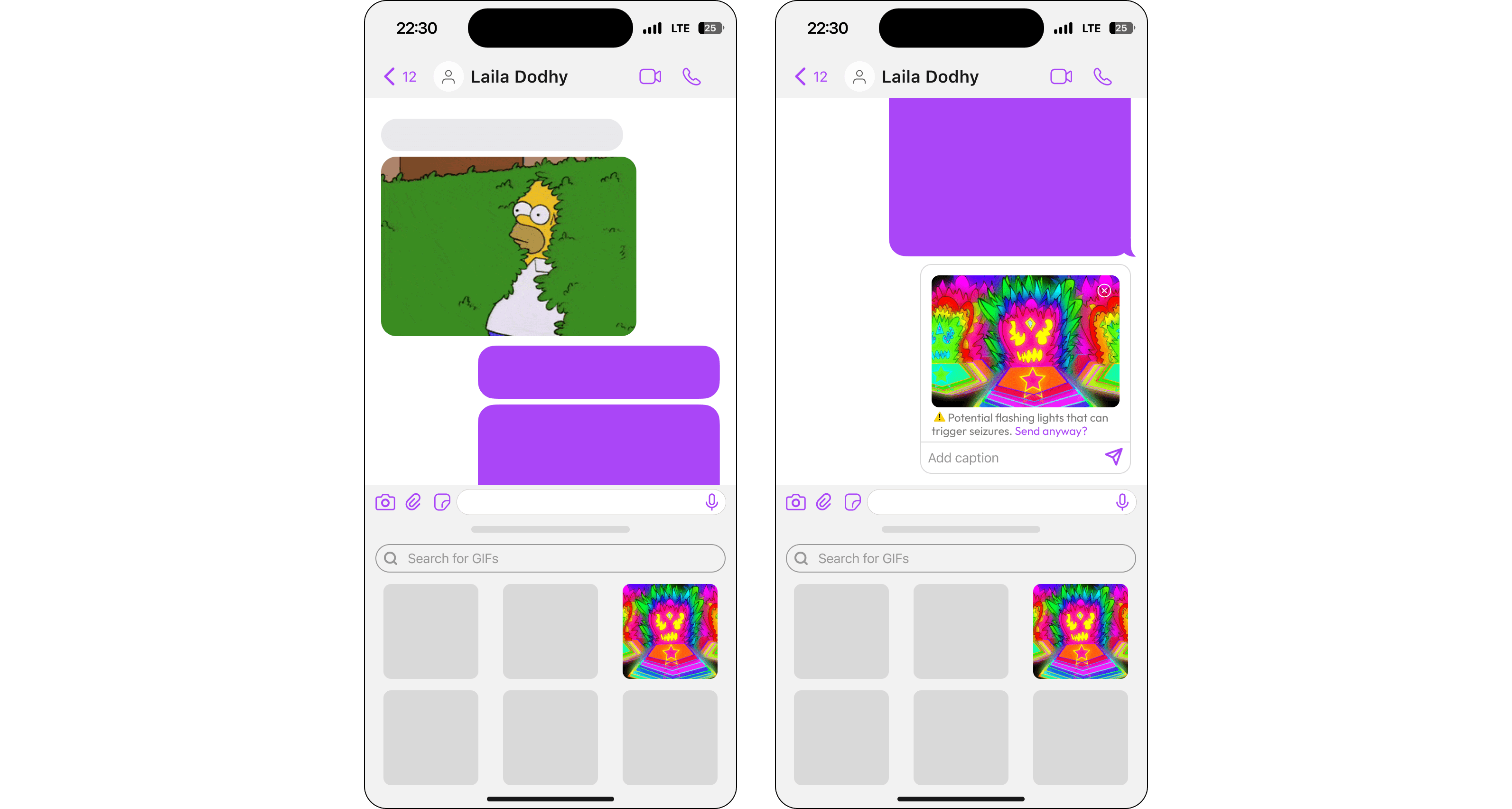

Content Sharing Friction: Adding "friction," like a warning modal, before a user sends a flashing GIF to encourage mindful sharing and promote creator responsibility.

Enhanced Viewer Warnings: An overlay warning on short-form video content that provides a crowdsourced risk level and allows users to either "View Anyway" or "Skip."

Impact

The key impact is a research paper, "Not Only Annoying but Dangerous," published at ACM ASSETS '24, an accessibility conference sponsored by Google, Meta, Microsoft, and Apple and attended by researchers from those companies.

The paper provides a foundational understanding of the challenges faced by photosensitive users and offers practical, user-centered design interventions.

The findings and proposed solutions serve as a blueprint for platforms and developers to create safer and more accessible online environments, shifting the responsibility of safety from the user to the platforms themselves.

The rest of this page covers the study in more detail.

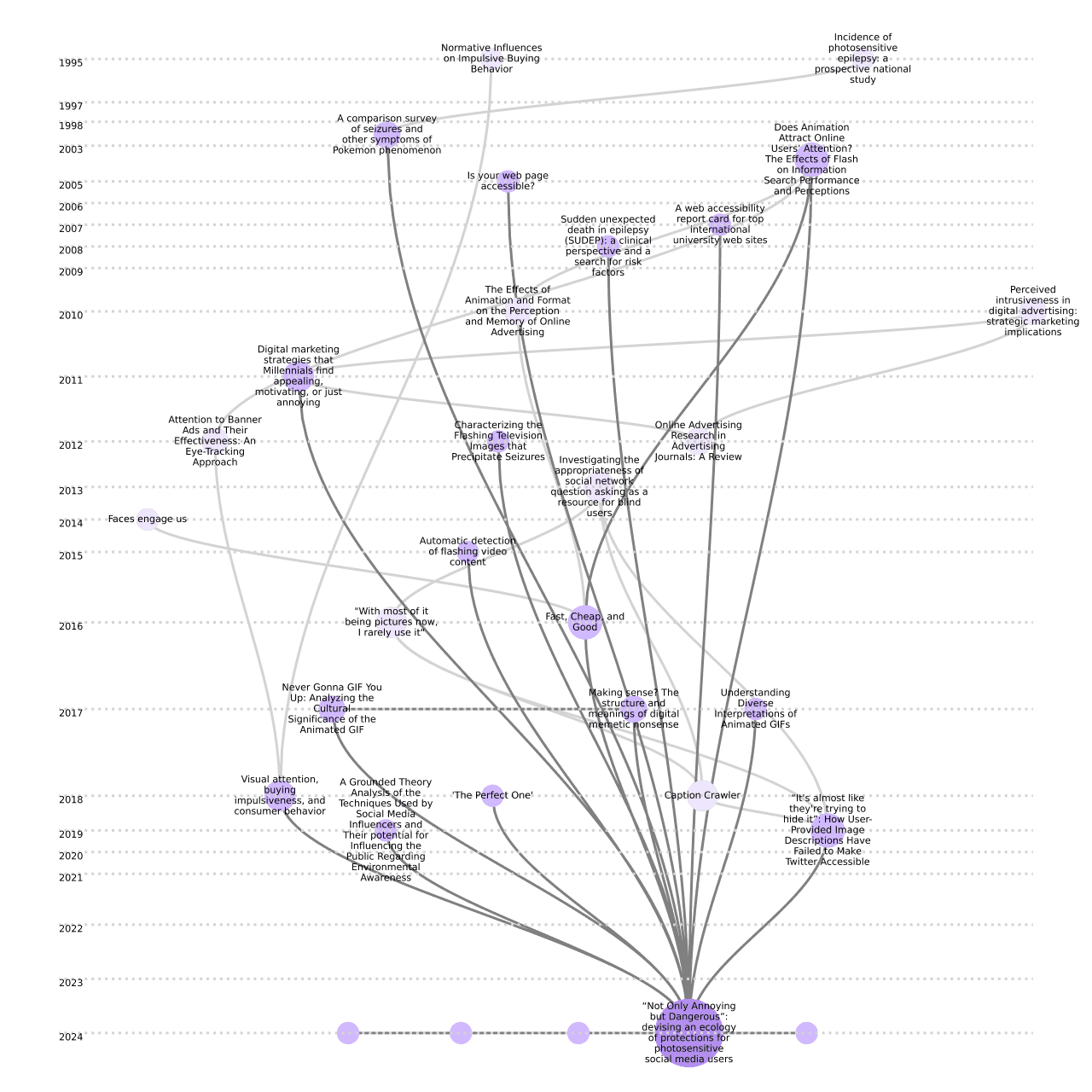

Secondary Research

Citation Tree

Key Findings

| FINDING 1 | FINDING 2 | FINDING 3 |
|---|---|---|
| Photosensitivity is not widely understood | Various triggers in digital content | Existing laws and guidelines are lacking |
| Most people are unaware of the forms of photosensitivity, which can range from photophobia (causing migraines, nausea, etc.) to photosensitive epilepsy (potentially leading to seizures and even death). | Flashing content isn't limited to videos or GIFs; it can also be created through basic web technologies (HTML, CSS, JavaScript). The Pokémon incident highlighted the potential for widespread harm. | Existing guidelines like WCAG are insufficient to address photosensitivity, as they are limited in scope. Additionally, legal actions like Zach's Law are too new and restricted to effectively solve the problem. |

| FINDING 4 | FINDING 5 | FINDING 6 |
|---|---|---|
| Limited system and platform safeguards | Content creators do not take responsibility | Support usually comes from the community |
| System-level features like dark mode and reduced motion, along with platform-specific warnings and user plugins, are inconsistent and not comprehensive enough to fully protect users from flashing content. | Because platforms and creators prioritize engagement and revenue, they often use flashing content and lack clear safety standards, enforcement, and effective reporting tools. | Relying on informal, community-based warnings from friends and family highlights the need for more formal systems to help photosensitive users identify and tag dangerous content. |

Primary Research

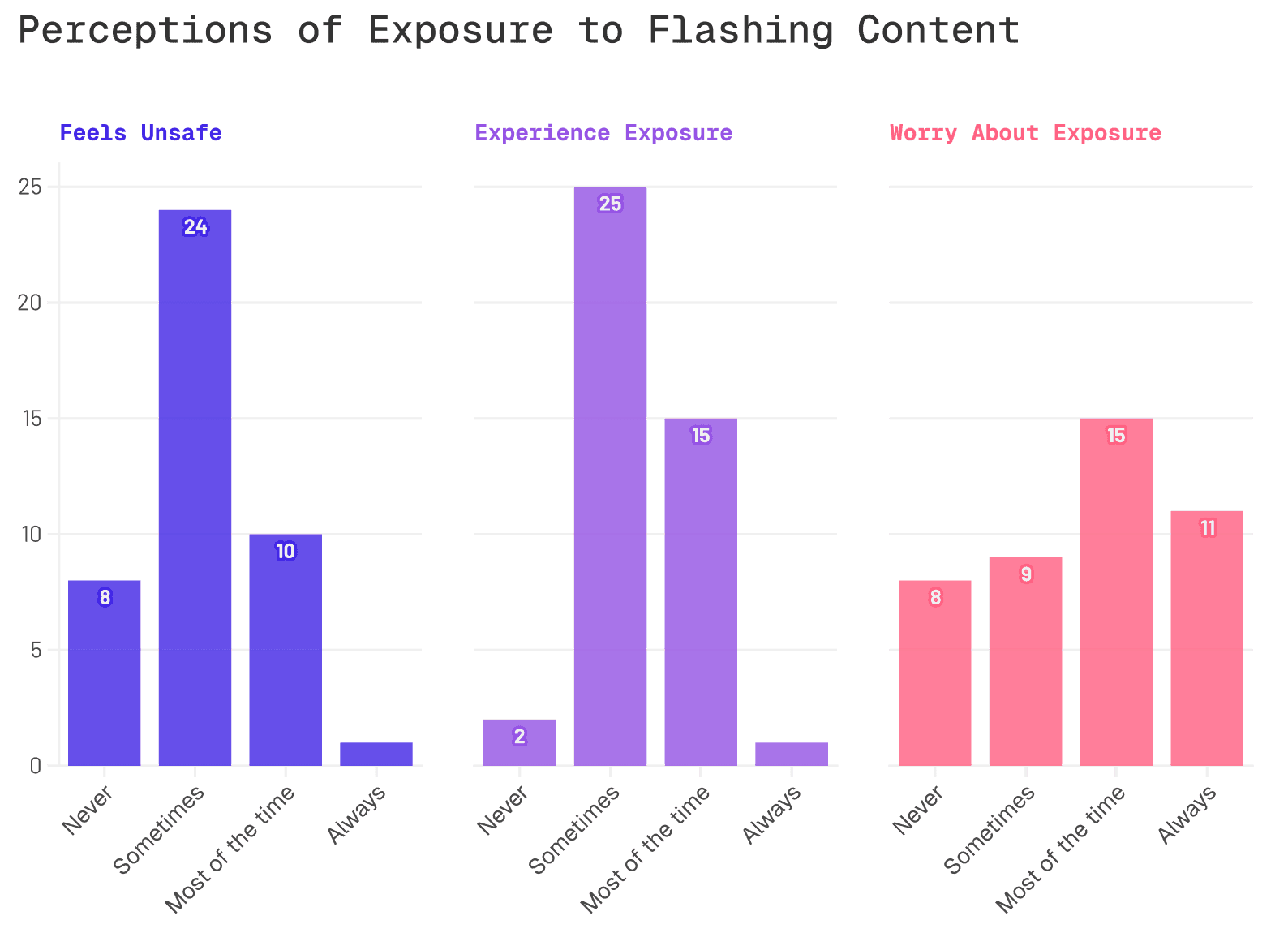

Survey

Distributed through social media and disability networks, our survey of 38 photosensitive respondents provided a broad quantitative understanding of the problem. Though small, the sample reflects the challenge of reaching this rare group, many of whom avoid social media due to safety concerns. The survey explored user demographics, exposure frequency, and coping strategies.

Key Findings

"Some friends add content warnings for flashing graphics" (P40).

| Pervasive worry and frequent exposure to flashing content | Limited safeguard feature awareness and effectiveness | Fear of being intentionally attacked with flashing content |
|---|---|---|
| Ineffective reporting options and reports are dismissed | Mitigation strategies include screen dimmers, filters, and dark mode | Heavily relied on their community to pre-screen content |

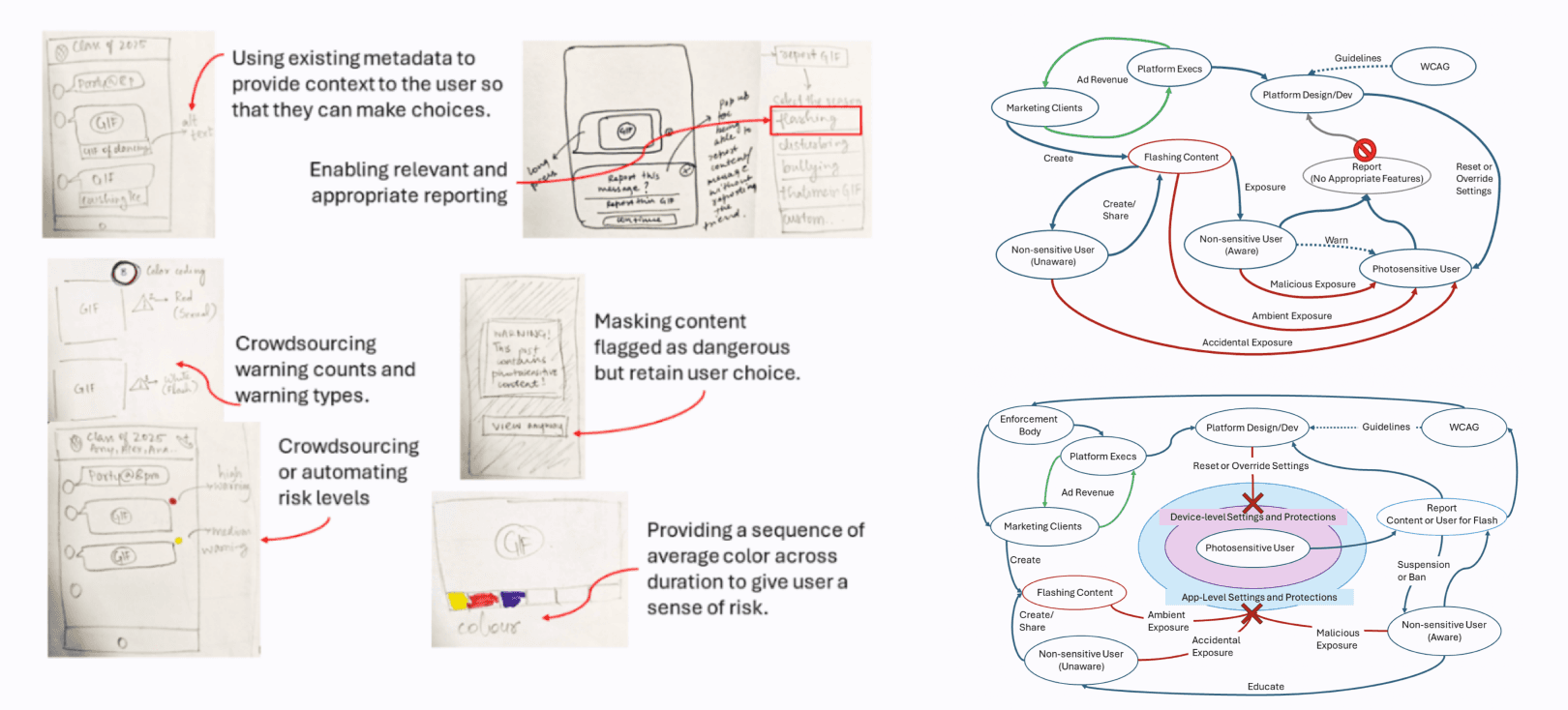

Co-design Workshops

Our co-design workshops with UX design students, some of whom self-identified as photosensitive, provided valuable qualitative insights. These firsthand perspectives enriched the discussions and ensured the proposed solutions were grounded in lived experience.

Activities

Analysis

The research team analyzed workshop materials by having each member review all papers from every activity, taking personal observation notes, and then collectively identifying common themes and unique ideas to propose feasible new features and policies.

Key Features Identified

| Universal graphics filters, customizable sensitivity settings | Enhanced reporting, specific tags, and community warnings | Intelligent filters, warnings, and interaction gates |
|---|---|---|
| Creators should warn and educate, with consequences for intentional targeting | Community-driven solutions, such as crowdsourced warnings | Granular customization of photosensitivity settings |

Proposed Solutions

Based on research and workshops, a multi-layered system of protections is proposed to address photosensitivity through policy, design, and community-driven features.

For brevity, only the most frequently noted of the 21 features identified in the workshops are presented here.

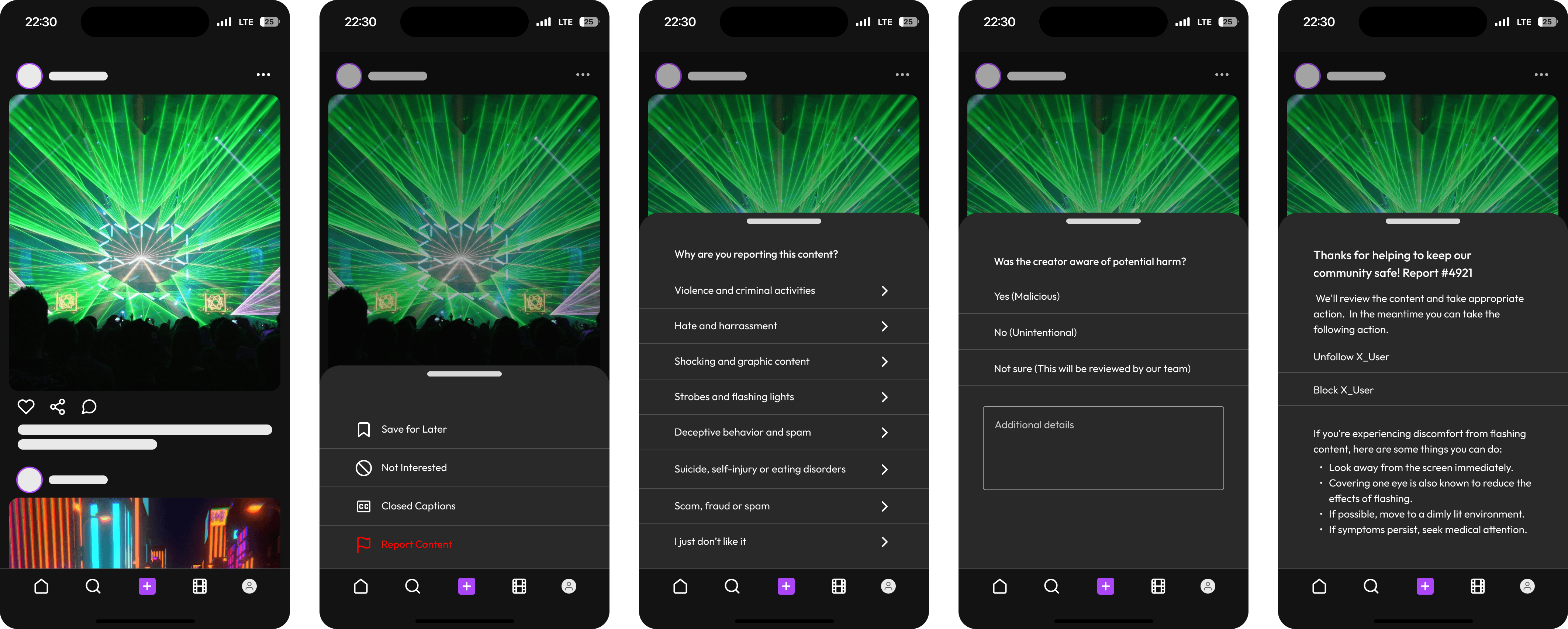

Detailed Reporting Features

Current reporting systems are inadequate for flashing content because they lack specific categories and fail to distinguish intentional from accidental posting. The proposed solution would add distinct options for reporting flashing content, allowing users to provide more context and receive immediate relief actions. Although this approach faces challenges in enforcement and resource management, existing content moderation systems show that handling large-scale reports is feasible.
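To make the shape of such a report concrete, here is a minimal sketch of how it could be modeled. It is an illustration only, not the data model from the paper or from any platform: the category names, the `FlashReport` fields, and the relief action labels are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Trigger(Enum):
    """Hypothetical photosensitivity-specific report categories."""
    FLASHING_LIGHTS = "flashing_lights"
    STROBE = "strobe"
    HIGH_CONTRAST_PATTERN = "high_contrast_pattern"


class Intent(Enum):
    """Lets the reporter say whether the content looked targeted."""
    UNSURE = "unsure"
    ACCIDENTAL = "accidental"
    MALICIOUS = "malicious"


@dataclass
class FlashReport:
    content_id: str
    trigger: Trigger
    intent: Intent = Intent.UNSURE
    note: str = ""  # optional free-text context from the reporter


def relief_actions(report: FlashReport) -> List[str]:
    """Return immediate actions for the reporter (labels are assumptions)."""
    actions = ["hide_content_for_reporter", "pause_autoplay_for_reporter"]
    if report.intent is Intent.MALICIOUS:
        # Targeted attacks get extra protection and faster human review.
        actions += ["offer_block_sender", "escalate_to_priority_review"]
    return actions
```

Separating `trigger` from `intent` keeps the two questions independent, so a user can flag a strobe effect without having to judge the poster's motives.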

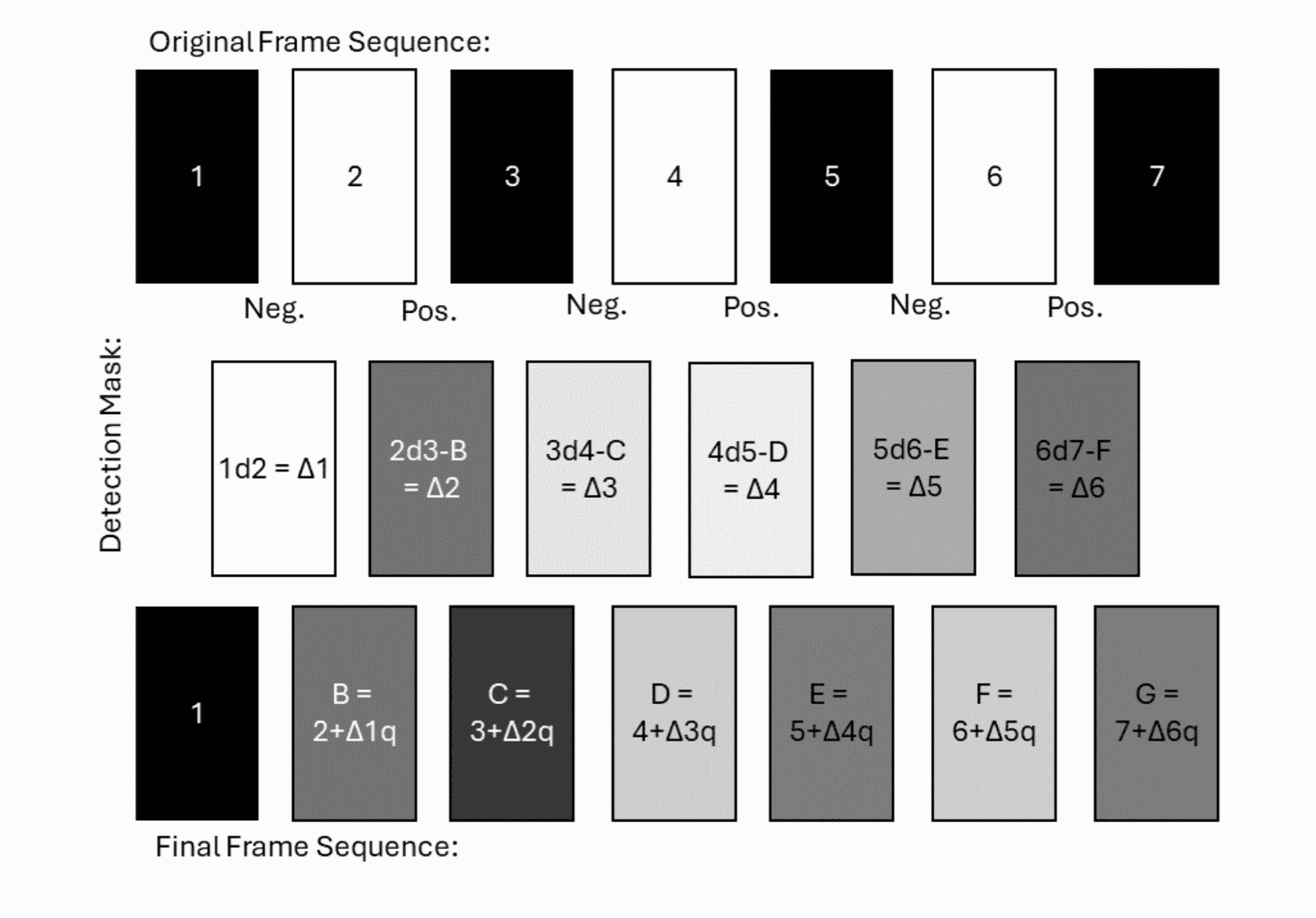

Intelligent Filters

Participants proposed two solutions for photosensitivity: system-wide autoplay controls that override app settings, and a full-screen graphic filter. These features are necessary because existing browser-based tools fail to cover app-based social media, where much of the triggering content is found.

Full-screen System-level Graphic Filter

We proposed a system-level filter that directly alters screen pixels to prevent photosensitivity triggers. It uses a detection algorithm to identify rapid changes in brightness and color between frames, then applies a filter to smooth transitions or replace the flashing areas with a solid color.
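The detect-then-smooth idea above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the filter from the paper: frames are reduced to flat lists of luminance values, color changes and solid-color replacement are left out, the 0.1 luminance threshold is an assumed value, and the limit of three flashes per second is loosely modeled on the WCAG "three flashes" criterion. All function names are hypothetical.

```python
from typing import List

# Hypothetical simplified frame: one luminance value per pixel, each in [0.0, 1.0].
Frame = List[float]


def mean_luminance(frame: Frame) -> float:
    """Average brightness of a (non-empty) frame."""
    return sum(frame) / len(frame)


def count_flashes(frames: List[Frame], threshold: float = 0.1) -> int:
    """Count pairs of opposing large brightness swings (up then down, or down then up)."""
    flashes = 0
    last_direction = 0  # +1 after a large brightening, -1 after a large darkening
    for prev, curr in zip(frames, frames[1:]):
        delta = mean_luminance(curr) - mean_luminance(prev)
        if abs(delta) < threshold:
            continue  # small changes are ignored
        direction = 1 if delta > 0 else -1
        if last_direction != 0 and direction != last_direction:
            flashes += 1
            last_direction = 0  # this pair is consumed; look for the next one
        else:
            last_direction = direction
    return flashes


def is_risky(frames: List[Frame], fps: int, max_flashes: int = 3,
             threshold: float = 0.1) -> bool:
    """True if any one-second window holds more than max_flashes flashes."""
    window = max(2, fps + 1)  # fps + 1 frames span one second
    for start in range(max(1, len(frames) - window + 1)):
        if count_flashes(frames[start:start + window], threshold) > max_flashes:
            return True
    return False


def smooth(frames: List[Frame], alpha: float = 0.2) -> List[Frame]:
    """Per-pixel exponential moving average: each output frame moves only a
    fraction alpha of the way toward the incoming frame."""
    if not frames:
        return []
    out = [list(frames[0])]
    for frame in frames[1:]:
        prev = out[-1]
        out.append([p + alpha * (c - p) for p, c in zip(prev, frame)])
    return out
```

With these defaults, a 10 fps clip that alternates between black and white frames is flagged by `is_risky`, and the same clip passed through `smooth` is not. A real system-level filter would also need to work per screen region and in real time, which this sketch does not attempt.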

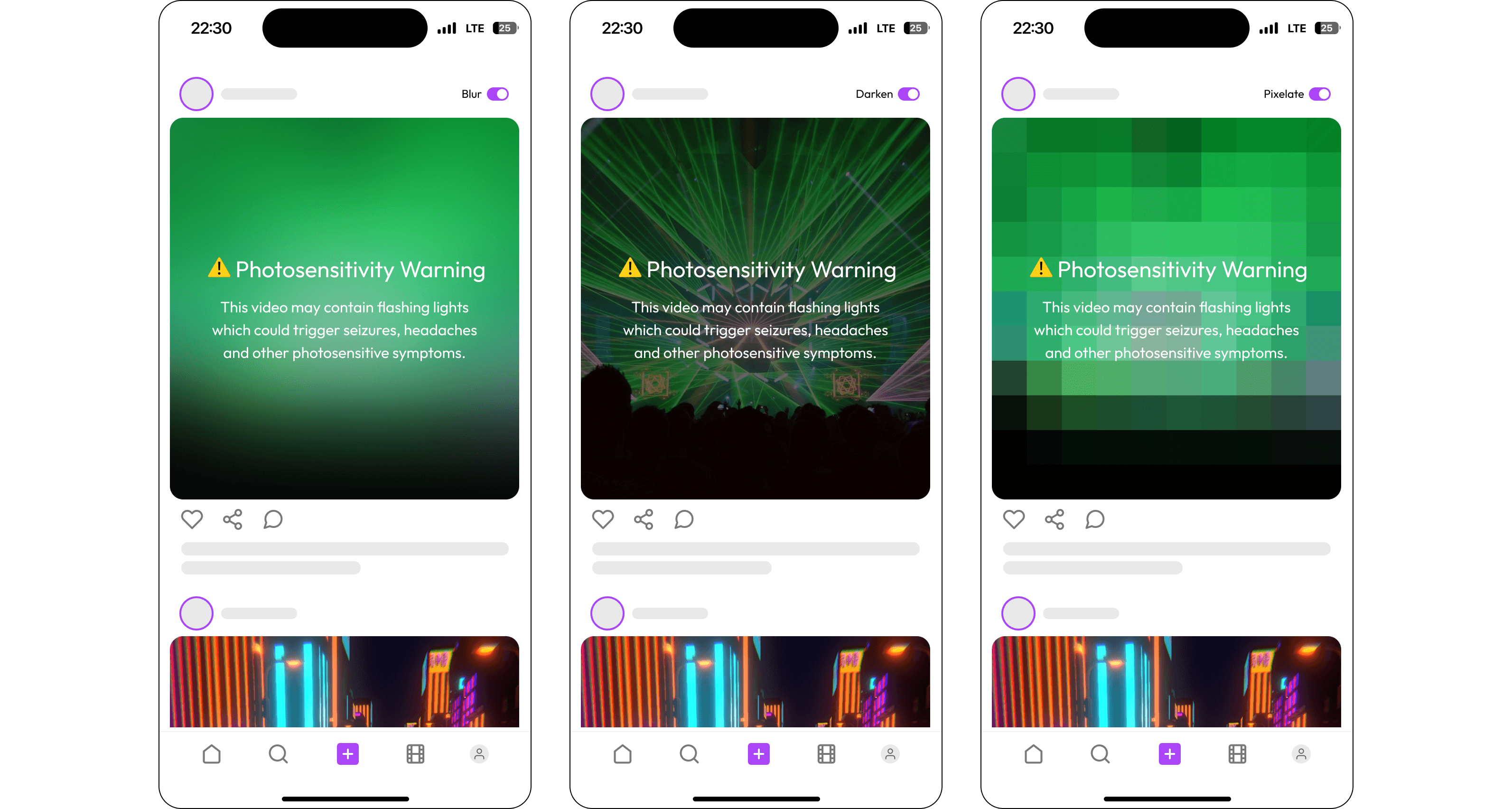

Customizable App Filters

Workshops repeatedly highlighted the need for more customizable, user-controlled filters that can blur, dim, or pixelate content. These filters would use a combination of community tags and user reports to identify triggering material. The discussion also emphasized the importance of educating users about photosensitivity since many may not recognize their own symptoms.
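As a rough sketch of how user-controlled settings might combine community tags and user reports, consider the following. The `FilterPrefs` fields, the default tag names, and the report threshold are assumptions for illustration, not values from the workshops.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set


@dataclass
class FilterPrefs:
    """Hypothetical per-user filter settings."""
    effect: str = "blur"       # one of "blur", "dim", or "pixelate"
    report_threshold: int = 3  # reports needed before the filter applies
    watched_tags: FrozenSet[str] = frozenset({"flashing", "strobe"})


def choose_effect(prefs: FilterPrefs, tags: Set[str],
                  report_count: int) -> Optional[str]:
    """Return the effect to apply to a post, or None to show it unfiltered."""
    tagged = bool(prefs.watched_tags & {t.lower() for t in tags})
    if tagged or report_count >= prefs.report_threshold:
        return prefs.effect
    return None
```

Either signal alone is enough to trigger the filter here, which favors caution; a user who finds that too aggressive could raise `report_threshold` or trim `watched_tags`.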

Encouraging Thoughtful Sharing

To address creator responsibility, this feature adds a warning modal to prevent accidental sharing of harmful GIFs, particularly in smaller online communities. Before sending a flashing GIF, the sender sees a pop-up that encourages them to be mindful of its potential impact on others, but they can still choose to send it. This approach gives creators a final chance to protect their community.

Viewer-End Warning Level

Movies like Spider-Man: Into the Spider-Verse highlight the risk of photosensitivity-triggering content spreading across social media.

To address this, a warning overlay is proposed for videos. This modal would appear before playback, show a crowdsourced risk level based on user reports, and give the user options to "View Anyway" or "Skip," empowering them to make an informed decision based on their own sensitivity.
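One way a crowdsourced risk level could be derived from user reports is sketched below. The report-rate cutoffs, the minimum report count, and the level names are illustrative assumptions; the paper does not prescribe a formula.

```python
from dataclasses import dataclass

# Ordered from least to most concerning; names are hypothetical.
LEVELS = ["unrated", "low", "moderate", "high"]


@dataclass
class VideoStats:
    views: int
    flash_reports: int  # reports that specifically flag flashing content


def risk_level(stats: VideoStats, min_reports: int = 3) -> str:
    """Map the share of viewers who reported flashing to a coarse level."""
    if stats.flash_reports < min_reports:
        return "unrated"  # too few reports to say anything
    rate = stats.flash_reports / max(stats.views, 1)
    if rate >= 0.01:
        return "high"
    if rate >= 0.001:
        return "moderate"
    return "low"


def should_show_warning(stats: VideoStats, tolerance: str = "low") -> bool:
    """Show the overlay when the video's level exceeds the user's tolerance."""
    return LEVELS.index(risk_level(stats)) > LEVELS.index(tolerance)
```

The `tolerance` parameter reflects the point made above that users decide based on their own sensitivity. Note that unrated videos never trigger the overlay in this sketch; a real design might treat them more cautiously.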

Conclusion

Our research reveals a concerning dissonance. Social media, intended for connection, can inadvertently isolate photosensitive users. This is due to inadequate platform features and the prevalence of harmful flashing content, particularly in short-form videos. This leaves users feeling worried and vulnerable.

To address this, we propose a range of solutions visualized across diverse digital contexts—social media feeds, direct messages, GIF databases, and short-form content platforms. I created these visualizations to demonstrate the various ways users encounter flashing content and illustrate how our proposed designs offer feasible improvements.

While further evaluation with photosensitive users is crucial, this research is grounded in the lived experiences of both photosensitive and non-photosensitive users, gathered through co-design workshops that fostered a community-driven approach. We believe that implementing the proposed solutions and prioritizing user well-being can lead to a more accessible online environment.

The full research paper, “Not Only Annoying but Dangerous”: devising an ecology of protections for photosensitive social media users, was published at ACM ASSETS '24.